Background

The deterioration of patients on the non-ICU wards of a hospital is a serious issue. Although its incidence is low, its consequences are severe: a prolonged length of stay, an unplanned transfer to the ICU, or even death. To counter this, the Radboudumc, like many other hospitals worldwide, uses an early warning system. Currently, the Radboudumc uses the MEWS (Modified Early Warning Score), which requires a nurse to measure five vital signs of a patient: the respiratory rate, heart rate, systolic blood pressure, core temperature and level of consciousness. The system then outputs an alarm score that indicates the patient's risk of deterioration. The MEWS uses discrete bins that are based on expert opinion, and the output value depends on the bins into which the individual vital sign values fall. Because the measurements are labor-intensive and a ward has many beds, these vital signs are usually measured only three times a day. This gives clinicians limited and often out-of-date information on which to base their decisions.
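The binning-and-summing mechanism described above can be made concrete with a short sketch. The bin thresholds below are hypothetical placeholders, loosely modelled on published MEWS tables, and are not the Radboudumc's actual bins. The names `MEWS_BINS`, `bin_score` and `mews` are likewise illustrative.

```python
from bisect import bisect_left

# HYPOTHETICAL bin tables, loosely modelled on published MEWS tables; they are
# NOT the Radboudumc's actual thresholds. Each entry holds
# (upper-inclusive bin edges, score per bin), with len(scores) == len(edges) + 1.
MEWS_BINS = {
    "resp_rate":   ([8, 14, 20, 29],         [2, 0, 1, 2, 3]),
    "heart_rate":  ([40, 50, 100, 110, 129], [2, 1, 0, 1, 2, 3]),
    "systolic_bp": ([70, 80, 100, 199],      [3, 2, 1, 0, 2]),
    "temperature": ([34.9, 38.0],            [2, 0, 1]),
}
# Level of consciousness on the AVPU scale (Alert, Voice, Pain, Unresponsive).
AVPU_SCORES = {"A": 0, "V": 1, "P": 2, "U": 3}


def bin_score(name: str, value: float) -> int:
    """Score of the bin that `value` falls into; a value equal to an edge
    stays in the lower bin."""
    edges, scores = MEWS_BINS[name]
    return scores[bisect_left(edges, value)]


def mews(vitals: dict) -> int:
    """Unweighted sum of the per-vital-sign bin scores plus the AVPU score."""
    total = sum(bin_score(name, vitals[name]) for name in MEWS_BINS)
    return total + AVPU_SCORES[vitals["consciousness"]]


patient = {"resp_rate": 18, "heart_rate": 72, "systolic_bp": 125,
           "temperature": 37.2, "consciousness": "A"}
print(mews(patient))  # 1 (only the respiratory rate lands in a non-zero bin)
```

Each vital sign contributes only through the bin it falls into, and the contributions are simply added. The next two paragraphs criticize exactly these properties.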

One of the problems with the MEWS is that it does not discriminate between patients: a value that is normal for one patient can be critical for another, and vice versa. It also does not take into account a patient's vital sign history, which is assumed to be highly indicative of deterioration. Moreover, the bin thresholds are essentially arbitrary. They are derived from the values of a large population of patients rather than adapted to each individual. Because these cut-offs are hard, a patient with a temperature of 38.1 °C, for example, falls into a higher bin, and therefore receives a higher risk score, than a patient with a temperature of 38.0 °C.
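Both problems can be illustrated with a minimal sketch. `temperature_bin` is a hypothetical MEWS-style bin with a hard cut-off at 38.0 °C, chosen to mirror the example in the text. `deviation_from_baseline` is an illustrative patient-specific alternative: a z-score relative to the patient's own recent measurements. It is not part of the MEWS and not necessarily how the models in this thesis use the vital sign history. Both patient histories are invented.

```python
import statistics


def temperature_bin(temp_c: float) -> int:
    """HYPOTHETICAL MEWS-style temperature bin with a hard cut-off:
    anything above 38.0 degrees Celsius scores 1, anything else scores 0."""
    return 1 if temp_c > 38.0 else 0


def deviation_from_baseline(history: list[float], current: float) -> float:
    """Illustrative patient-specific measure: how many standard deviations
    the current value lies from the patient's own recent measurements."""
    mean = statistics.mean(history)
    sd = statistics.stdev(history)  # sample standard deviation
    return (current - mean) / sd


# Hard cut-off: a 0.1 degree difference changes the bin score.
print(temperature_bin(38.0), temperature_bin(38.1))  # 0 1

# The same reading of 38.0 means something different for two patients.
runs_warm = [37.8, 38.0, 37.9, 38.1, 37.9]    # hypothetical history
usually_low = [36.4, 36.5, 36.3, 36.6, 36.5]  # hypothetical history
print(f"{deviation_from_baseline(runs_warm, 38.0):.2f}")    # about 0.5
print(f"{deviation_from_baseline(usually_low, 38.0):.2f}")  # about 13.5
```

The fixed bin treats both patients identically at 38.0 °C. The history-based measure shows that this reading is ordinary for the first patient and far outside the normal range of the second.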

Because the MEWS simply sums the bin scores of the vital signs without any weighting, all vital signs are treated as equally important in predicting patient risk. This assumption is not supported by the existing literature. A change in the level of consciousness, for example, is more indicative of clinical deterioration than a change in temperature and should therefore affect the risk score more strongly. For these reasons, the MEWS yields a large number of false alarms for patients who are not deteriorating. This causes 'alarm fatigue' in nurses, because they have to recheck every patient who raises an alarm. As a result, nurses are likely to ignore alarms for patients who look healthy and rely on their intuition rather than on the implemented system, which renders a system with many false alarms effectively useless. An alarm system that limits the number of false alarms helps nurses respond more accurately to the alarms they do receive.
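The difference between an unweighted sum and a weighted one can be shown with two hypothetical patients. One has moved up one bin in consciousness and the other has moved up one bin in temperature. The weights in `WEIGHTS` are invented purely to reflect the paragraph's example. A model such as logistic regression would instead estimate its weights from data.

```python
# Per-vital-sign bin scores for two HYPOTHETICAL patients: one whose
# consciousness moved up one bin, one whose temperature moved up one bin.
patient_a = {"resp_rate": 0, "heart_rate": 0, "systolic_bp": 0,
             "temperature": 0, "consciousness": 1}
patient_b = {"resp_rate": 0, "heart_rate": 0, "systolic_bp": 0,
             "temperature": 1, "consciousness": 0}


def unweighted_score(bins: dict) -> int:
    """MEWS-style aggregation: every vital sign counts equally."""
    return sum(bins.values())


# HYPOTHETICAL weights, chosen only to illustrate that a change in
# consciousness can count more than a change in temperature.
WEIGHTS = {"resp_rate": 1.0, "heart_rate": 1.0, "systolic_bp": 1.0,
           "temperature": 0.5, "consciousness": 3.0}


def weighted_score(bins: dict) -> float:
    """Weighted aggregation of the same bin scores."""
    return sum(WEIGHTS[name] * score for name, score in bins.items())


print(unweighted_score(patient_a), unweighted_score(patient_b))  # 1 1
print(weighted_score(patient_a), weighted_score(patient_b))      # 3.0 0.5
```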

All of these reasons led us to look for a system that detects patient deterioration better than the MEWS, because the current system cannot detect deterioration accurately and at an early stage.

Research question: "How feasible is it to create a system that accurately predicts early clinical deterioration in patients in real time, limits the number of false alarms, and does so in an explainable manner by showing which features contribute to the prediction and which features can be left out?"

Methods

For my Master's thesis, I developed several machine learning (ML) models that predict whether a patient is likely to deteriorate in the coming hours. The models use the patient's current vital signs, measured once per minute; their immediate vital sign history; admission information, such as whether the patient was admitted to the surgery ward and whether the admission is high-risk; and patient characteristics such as age, gender and BMI. Several studies have shown that cardiac arrests and unplanned ICU transfers, for example, are preceded by changes in vital sign values that can be detected hours before the actual event. Detecting these changes early leaves the clinical staff enough time to prevent such an event, which could substantially reduce patient morbidity and mortality. The models are trained on the data of 1530 patients from the internal medicine, surgery and rheumatology wards of the Radboudumc. The measured vital signs include, for example, heart rate, diastolic blood pressure and skin temperature. To cope with missing vital sign measurements and to model how a variable changes over time, we used 30-minute time windows instead of one-minute intervals. Within each window, the mean, standard deviation, minimum, maximum and skewness of each vital sign serve as the features for that vital sign. A sliding window approach bases each prediction not only on the current time window but also on the three preceding windows, so that the models can detect temporal changes in the data. Finally, because events are much rarer than non-events in the data, we balanced the training data so that events occur as often as non-events.
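The windowing and sliding-window steps could be sketched in pandas as follows. This is a minimal sketch and assumes one DataFrame per patient with a per-minute `DatetimeIndex` and one column per vital sign. The column names, the synthetic data and the helper names `window_features` and `add_history` are hypothetical. Non-overlapping 30-minute windows are formed with `pd.Grouper`, and the features of the three preceding windows are appended with `shift`.

```python
import numpy as np
import pandas as pd

N_PREVIOUS = 3  # preceding windows whose features are added to the current one


def skew(values: pd.Series) -> float:
    """Sample skewness of one vital sign within one window (NaNs skipped)."""
    return values.skew()


def window_features(per_minute: pd.DataFrame, window: str = "30min") -> pd.DataFrame:
    """Summarise each vital sign per time window; missing minutes (NaN) are
    skipped by the pandas aggregations."""
    grouped = per_minute.groupby(pd.Grouper(freq=window))
    feats = grouped.agg(["mean", "std", "min", "max", skew])
    feats.columns = [f"{vital}_{stat}" for vital, stat in feats.columns]
    return feats


def add_history(feats: pd.DataFrame, n_previous: int = N_PREVIOUS) -> pd.DataFrame:
    """Concatenate each window's features with those of the n_previous
    preceding windows; rows without a full history are dropped."""
    lagged = [feats.shift(lag).add_suffix(f"_t-{lag}")
              for lag in range(1, n_previous + 1)]
    return pd.concat([feats, *lagged], axis=1).dropna()


# Synthetic stand-in for four hours of per-minute data of one patient.
rng = np.random.default_rng(0)
index = pd.date_range("2024-01-01 08:00", periods=240, freq="min")
per_minute = pd.DataFrame({"heart_rate": rng.normal(80, 5, len(index)),
                           "resp_rate": rng.normal(16, 2, len(index))},
                          index=index)
per_minute.iloc[::7, 0] = np.nan  # hypothetical missing heart-rate minutes

X = add_history(window_features(per_minute))
print(X.shape)  # (5, 40): 8 windows minus 3 without history; 2 vitals x 5 stats x 4 windows
```

Dropping rows with missing values is the simplest choice here. A real pipeline would need an explicit strategy for windows in which a vital sign was not measured at all.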

As the number of useful events is still quite small, we decided not to predict whether an event occurs during the current time window. Instead, the models predict whether an event will occur within one, three, six, twelve or twenty-four hours after the current time window. Because every window followed by an event within the chosen horizon counts as positive, this also increases the number of positive examples. It is, moreover, a more useful prediction for a clinician, as it leaves time to take action to prevent the event.
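A minimal sketch of turning event times into one binary label per horizon, followed by a balancing step, is shown below. The text only says that the data were balanced. Random undersampling of non-events is an assumption made here for illustration, and the helper names `horizon_labels` and `undersample` are hypothetical.

```python
import numpy as np
import pandas as pd

HORIZONS_H = [1, 3, 6, 12, 24]  # prediction horizons in hours


def horizon_labels(window_ends: pd.DatetimeIndex,
                   event_times: pd.DatetimeIndex) -> pd.DataFrame:
    """Label window t as 1 for horizon h if an event occurs in (t, t + h]."""
    events = np.sort(event_times.values)
    ends = window_ends.values
    labels = {}
    for h in HORIZONS_H:
        limits = (window_ends + pd.Timedelta(hours=h)).values
        # Events <= t + h minus events <= t gives the number of events in (t, t + h].
        n_events = (np.searchsorted(events, limits, side="right")
                    - np.searchsorted(events, ends, side="right"))
        labels[f"event_within_{h}h"] = (n_events > 0).astype(int)
    return pd.DataFrame(labels, index=window_ends)


def undersample(X: pd.DataFrame, y: pd.Series, seed: int = 0):
    """ASSUMED balancing method: randomly drop non-event rows until both classes
    are equally frequent. Requires more non-events than events; apply it to
    the training data only, never to the test data."""
    rng = np.random.default_rng(seed)
    pos = np.flatnonzero(y.to_numpy() == 1)
    neg = np.flatnonzero(y.to_numpy() == 0)
    kept_neg = rng.choice(neg, size=pos.size, replace=False)
    keep = np.sort(np.concatenate([pos, kept_neg]))
    return X.iloc[keep], y.iloc[keep]


# Hypothetical example: 48 half-hour windows (24 hours) and a single event.
window_ends = pd.date_range("2024-01-01 08:30", periods=48, freq="30min")
event_times = pd.DatetimeIndex(["2024-01-01 20:10"])
labels = horizon_labels(window_ends, event_times)
print(labels.sum().tolist())  # [2, 6, 12, 24, 24]

X = pd.DataFrame({"feature": np.arange(48)}, index=window_ends)  # placeholder features
X_bal, y_bal = undersample(X, labels["event_within_6h"])
print(len(y_bal), int(y_bal.sum()))  # 24 12
```

The label counts show why longer horizons help when events are scarce. A single event produces 2 positive windows for the one-hour horizon but 24 for the twelve-hour horizon.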

Much research has already been done on this topic, and many papers report models that outperform the current early warning scores. These models, however, are trained on far more data than we have available. Moreover, all of the reported models we found aim to predict an objective definition of clinical deterioration, such as death or cardiac arrest. We instead tried to predict a far more subjective but, in our opinion, more complete definition of deterioration. We based our definition not only on endpoints like death and cardiac arrest but also on indicators that such events may happen in the future, such as antibiotics being started or a CT scan being performed. This would help to predict clinical deterioration earlier and therefore allow earlier and often less severe interventions, before a patient has deteriorated heavily. Because it is unclear which specific actions and complications are true indicators of deterioration, we created multiple definitions of clinical deterioration.
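One way to keep several definitions of deterioration side by side is to represent each definition as a set of event types and filter a patient's event log with it. The sketch below uses invented event-type names and groupings, not the thesis's actual definitions. The helper `deterioration_times` is likewise hypothetical. The resulting event times can then be used to label each time window.

```python
import pandas as pd

# HYPOTHETICAL event types and definitions, for illustration only.
NARROW = {"death", "cardiac_arrest", "unplanned_icu_transfer"}
DEFINITIONS = {
    "narrow": NARROW,
    # Broader definition: also count indicators that may precede the endpoints.
    "broad": NARROW | {"antibiotics_started", "ct_scan"},
}


def deterioration_times(event_log: pd.DataFrame, definition: str) -> pd.DatetimeIndex:
    """Times of all logged events that count as deterioration under `definition`."""
    mask = event_log["event_type"].isin(sorted(DEFINITIONS[definition]))
    return pd.DatetimeIndex(event_log.loc[mask, "time"]).sort_values()


# Hypothetical event log of a single patient.
log = pd.DataFrame({
    "time": pd.to_datetime(["2024-01-01 14:00", "2024-01-01 16:30",
                            "2024-01-02 03:15"]),
    "event_type": ["ct_scan", "antibiotics_started", "unplanned_icu_transfer"],
})
print(len(deterioration_times(log, "narrow")),
      len(deterioration_times(log, "broad")))  # 1 3
```

Under the broad definition, the first positive event occurs more than 13 hours earlier than under the narrow one. This is the kind of earlier signal that the broader definition aims for.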

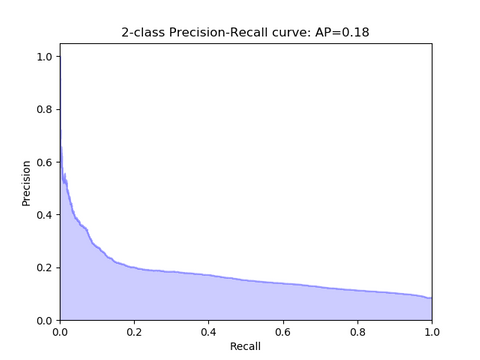

The main goal was for our models to perform well on a small, imbalanced dataset and to be explainable. We therefore used three machine learning models: logistic regression, random forest and neural networks. These three model types differ in how explainable they are, which allowed us to discuss the trade-off between performance and explainability. We originally also wanted to use support vector machines, one-class support vector machines and relevance vector machines, but training them took too long on our data. We used the area under the precision-recall curve (AUPRC) as our evaluation metric. We believe it is a better indicator of model performance on imbalanced data than the more commonly used area under the ROC curve (AUROC), because precision directly reflects how many of the raised alarms are false, which is what matters when events are rare.
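The comparison of the three model families on the AUPRC can be sketched with scikit-learn. This sketch runs on synthetic, imbalanced data generated with `make_classification`, not on the Radboudumc data, and its hyperparameters are arbitrary placeholders rather than tuned values. Note that scikit-learn's `average_precision_score` summarises the precision-recall curve as a step-wise weighted mean of precisions, which differs slightly from a trapezoidal area.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import average_precision_score, roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data with about 5% positives; NOT the Radboudumc data.
X, y = make_classification(n_samples=5000, n_features=20, n_informative=5,
                           weights=[0.95], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

# The three model families, roughly from most to least explainable.
# Hyperparameters are arbitrary placeholders.
models = {
    "logistic_regression": make_pipeline(StandardScaler(),
                                         LogisticRegression(max_iter=1000)),
    "random_forest": RandomForestClassifier(n_estimators=200, random_state=0),
    "neural_network": make_pipeline(StandardScaler(),
                                    MLPClassifier(hidden_layer_sizes=(32,),
                                                  max_iter=500, random_state=0)),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores = model.predict_proba(X_test)[:, 1]  # predicted probability of an event
    results[name] = (average_precision_score(y_test, scores),
                     roc_auc_score(y_test, scores))
    print(f"{name}: AUPRC={results[name][0]:.3f}  AUROC={results[name][1]:.3f}")
```

On imbalanced data such as this, the AUROC values typically look much more flattering than the AUPRC values, because the AUROC is dominated by the many easy-to-rank negatives.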

Results

We found that the models seem better able to predict clinical deterioration when a broader definition of deterioration is used to train and test them, and that their performance also increases when they have to predict further into the future. Our models, especially the logistic regression models, outperformed the existing early warning scores, with the best model achieving an AUPRC of 0.18 when predicting 24 hours ahead. However, many of the models still produced a large number of false positives, although fewer than the early warning scores. This is likely caused either by noisy data or by a definition of clinical deterioration that is not adequate. Because of this large number of false positives, our trained models are unlikely to be usable in practice. Nevertheless, we believe that even a small improvement over the currently used early warning system could reduce patient morbidity and mortality.
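One way to put an AUPRC value such as 0.18 in context is to compare it with the chance level. For a classifier without skill, the AUPRC is approximately equal to the fraction of positive examples, whereas the AUROC is approximately 0.5 regardless of imbalance. The prevalences in this sketch are hypothetical. Broader definitions and longer horizons label more windows as positive, so they also raise this chance level, which is worth keeping in mind when comparing AUPRC values across settings.

```python
import numpy as np
from sklearn.metrics import average_precision_score, roc_auc_score

rng = np.random.default_rng(0)
baseline = []  # (observed prevalence, AUPRC, AUROC) of a no-skill classifier
for prevalence in (0.01, 0.05, 0.20):  # hypothetical positive rates
    y = (rng.random(100_000) < prevalence).astype(int)  # synthetic labels
    random_scores = rng.random(y.size)                  # scores with no skill
    auprc = average_precision_score(y, random_scores)
    auroc = roc_auc_score(y, random_scores)
    baseline.append((y.mean(), auprc, auroc))
    print(f"prevalence={y.mean():.3f}  AUPRC={auprc:.3f}  AUROC={auroc:.3f}")
```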

There are ample avenues for future research. First, more data could be gathered so that the models see more patients, and more diverse ones, during training. Furthermore, future work should focus on finding an adequate definition of clinical deterioration, as a model cannot learn to detect deterioration from a sub-optimal definition. The set of features could be extended, for example by incorporating lab tests or by looking at the frequency domain rather than the time domain. Other models could also be used, for example an LSTM, which explicitly models the passing of time, and the way in which the optimal hyperparameter settings are determined could be improved. We have contributed to the current research by proposing a definition of clinical deterioration that is more subjective than other definitions. This definition could, however, help to detect signs of high-risk events earlier than models that only look at well-defined endpoints. We have also proposed that future research in the medical domain use the AUPRC, as it is more indicative of model performance on imbalanced data than the commonly used AUROC.
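As a minimal sketch of what frequency-domain features could look like, the function below computes the spectral power of a per-minute vital sign series within one window, split into frequency bands. The band edges (in cycles per minute), the linear interpolation of missing minutes and the name `band_powers` are all assumptions made for illustration. With one sample per minute, the highest observable frequency is 0.5 cycles per minute.

```python
import numpy as np


def band_powers(values, bands=((0.0, 0.1), (0.1, 0.5)), fs: float = 1.0) -> list[float]:
    """Spectral power of a per-minute signal (fs = 1 sample per minute) in each
    HYPOTHETICAL frequency band (lo, hi], in cycles per minute."""
    x = np.array(values, dtype=float)  # copy, so the caller's data is not modified
    # Fill missing minutes by linear interpolation (an assumption).
    idx = np.arange(x.size)
    missing = np.isnan(x)
    x[missing] = np.interp(idx[missing], idx[~missing], x[~missing])
    x = x - x.mean()  # remove the mean level so only fluctuations contribute
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return [float(power[(freqs > lo) & (freqs <= hi)].sum()) for lo, hi in bands]


t = np.arange(30)  # one 30-minute window, one sample per minute
slow = 80 + 5 * np.sin(2 * np.pi * t / 15)  # oscillation with a 15-minute period
fast = 80 + 5 * np.sin(2 * np.pi * t / 3)   # oscillation with a 3-minute period
slow_p, fast_p = band_powers(slow), band_powers(fast)
print(slow_p, fast_p)  # slow: power in the first band; fast: in the second
```

Both windows have the same mean and range, so time-domain statistics such as the mean, minimum and maximum barely distinguish them. The band powers separate them clearly.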